In collaboration with Pierre Labendzki

Deepfake generation built with a decoder.

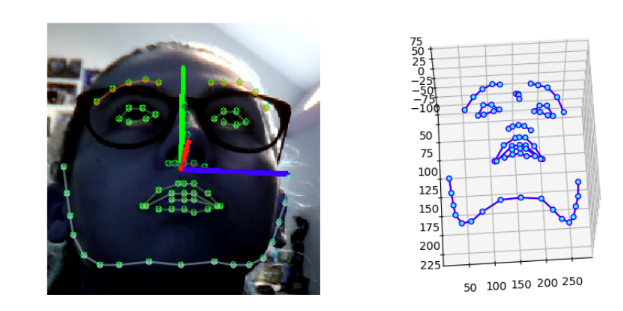

This application takes a video as input, extracts the faces present in it, and computes a chosen set of features for each face: the opening between the lips, the facial expression, the orientation of the head (Euler angles), and the size of the bounding box (the face masks are used to remove the background).
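The geometric features listed above can be sketched with a few lines of NumPy. This is a minimal illustration, not the project's actual code: the function names, the choice of normalizing the lip gap by face height, and the `Rz @ Ry @ Rx` rotation convention are all assumptions, and the landmarks and rotation matrix are presumed to come from whichever face detector and pose estimator is used upstream.

```python
import numpy as np


def lip_opening(upper_lip, lower_lip, face_height):
    """Lip gap normalized by face height (normalization is an assumption)."""
    gap = np.linalg.norm(np.asarray(lower_lip, float) - np.asarray(upper_lip, float))
    return float(gap / face_height)


def bounding_box(landmarks):
    """Axis-aligned box (x_min, y_min, width, height) around 2D landmarks."""
    pts = np.asarray(landmarks, float)
    x_min, y_min = pts.min(axis=0)
    x_max, y_max = pts.max(axis=0)
    return float(x_min), float(y_min), float(x_max - x_min), float(y_max - y_min)


def euler_angles(R):
    """Angles in degrees about the x, y and z axes.

    Assumes R = Rz @ Ry @ Rx and ignores the gimbal-lock case
    (rotation about y close to +/-90 degrees).
    """
    R = np.asarray(R, float)
    angle_x = np.arctan2(R[2, 1], R[2, 2])
    angle_y = np.arctan2(-R[2, 0], np.hypot(R[0, 0], R[1, 0]))
    angle_z = np.arctan2(R[1, 0], R[0, 0])
    return tuple(float(a) for a in np.degrees([angle_x, angle_y, angle_z]))
```

Normalizing the lip gap by face height keeps the value comparable between faces that appear at different distances from the camera; a raw pixel distance would work equally well if all faces are first resized to a common resolution.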

The facial expression is classified with a CNN.

Once the features are gathered, our decoder generates images from the features of the faces extracted from the video.
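One way to hand these features to a decoder is to pack them into a single fixed-length vector per face. The sketch below is only an assumption about how that could look: the expression label set, the scaling of the angles, and the `pack_features` name are hypothetical and are not taken from the project.

```python
import numpy as np

# Hypothetical label set; the real classifier's classes are not specified.
EXPRESSIONS = ("neutral", "happy", "sad", "surprised")


def pack_features(lip_gap, expression, angles_deg, box_size):
    """Concatenate per-face features into one conditioning vector.

    Layout: [lip_gap, one-hot expression, 3 scaled angles, box width, box height].
    """
    one_hot = np.zeros(len(EXPRESSIONS))
    one_hot[EXPRESSIONS.index(expression)] = 1.0
    # Scale angles to roughly [-1, 1] so no feature dominates the input.
    angles = np.asarray(angles_deg, float) / 180.0
    return np.concatenate(([lip_gap], one_hot, angles, np.asarray(box_size, float)))


vector = pack_features(0.1, "happy", (10.0, -20.0, 30.0), (0.5, 0.6))
```

A decoder network would then take one such vector per frame as input and output the corresponding face image.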

Example of the detected face features and their 3D representation.